Airflow with ArgoCD, Kustomize, and Helm: Introducing CI/CD for Our Data Scientist Team

Deploy Airflow on ArgoCD using Airflow’s Helm chart, manage the deployment with Kustomize, and fully automate Airflow CI/CD.


Prerequisites for following this blog post:

- Basic knowledge of K8s and ArgoCD. I recommend this video for an overview of what ArgoCD is.

- Basic knowledge of Kustomize.

Here is the repository for deploying Airflow on ArgoCD.

Before you start using the repository, you need to have:

- A running K8s cluster. If you want to get a lightweight K3s cluster up and running, you can check out this online tutorial.

- A customized Airflow image, if your use case requires one. Building that image is outside the scope of this article.

The Benefits of Today’s Tools

1. Why Kustomize?

Airflow comes with a nice Helm chart, and our team has been using it for a while to experiment with Airflow. However, as we push Airflow to production, we need a way to configure one Airflow cluster for development and another for production.

We want the two clusters to accept different configurations for ingress routes, passwords, worker replicas, and so on. Using two values.yaml files isn’t the most elegant approach and is less convenient when deploying with ArgoCD. Using different branches also creates problems and leads to a pile of merge conflicts over time. Kustomize solves this deployment configuration issue quite well, and it pushes us to keep up our infrastructure-as-code culture.
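As a rough sketch of what this looks like, assume a shared `base` folder plus one overlay folder per environment. The paths, the `airflow-worker` resource name, and the replica count below are illustrative assumptions, not taken from the repository:

```yaml
# overlays/production/kustomization.yaml (hypothetical path)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

# Reuse everything defined in the shared base.
resources:
  - ../../base

# Production-only override: scale the Airflow workers.
# "airflow-worker" assumes a Helm release named "airflow";
# adjust it to the name your chart actually renders.
replicas:
  - name: airflow-worker
    count: 3
```

A develop overlay would have the same shape with its own values, so both environments live side by side on one branch instead of diverging across branches.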

2. Why use ArgoCD?

ArgoCD’s declarative GitOps model enables a K8s-native CD workflow. ArgoCD runs inside the cluster and pulls changes from Git, so we don’t need to store K8s credentials in our pipeline server (either GitHub or GitLab), which makes credential management a bit easier.
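To make "declarative" concrete, here is a minimal sketch of an ArgoCD `Application` that tracks one environment’s folder in Git. The application name, repository URL, branch, path, and namespaces are placeholders rather than values from the repository:

```yaml
# Hypothetical ArgoCD Application for the develop environment.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: airflow-develop          # placeholder name
  namespace: argocd              # namespace where ArgoCD is installed (assumed)
spec:
  project: default
  source:
    repoURL: https://github.com/example-org/airflow-argocd.git  # placeholder URL
    targetRevision: main         # placeholder branch
    path: overlays/develop       # placeholder path to the environment's overlay
  destination:
    server: https://kubernetes.default.svc   # deploy into the cluster ArgoCD runs in
    namespace: airflow           # placeholder target namespace
  syncPolicy:
    automated:
      prune: true                # delete resources that were removed from Git
      selfHeal: true             # revert manual changes made in the cluster
    syncOptions:
      - CreateNamespace=true
```

With `syncPolicy.automated` set, a commit to the tracked path is enough to trigger a deployment; the pipeline server never talks to the cluster directly.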

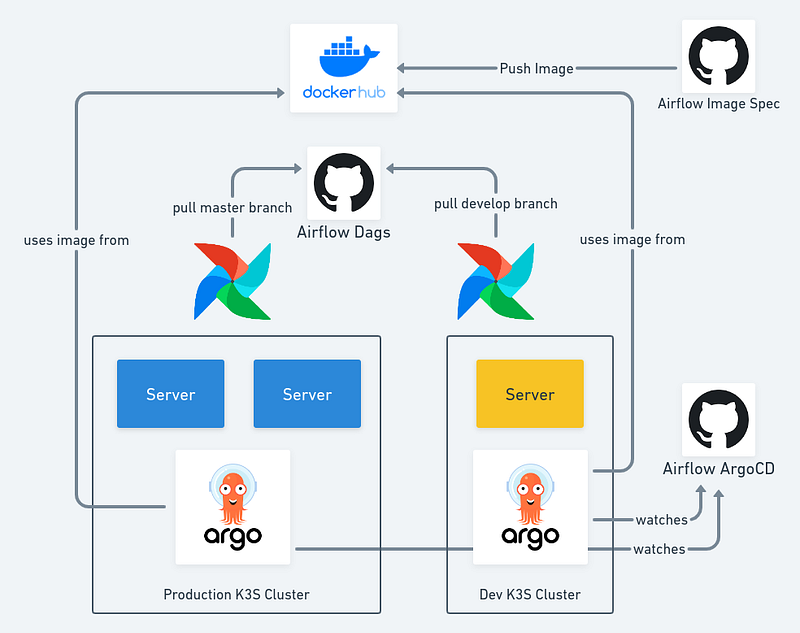

Architecture and Scenario

Scenario Walk Through:

- When a data scientist realizes that they need a new dependency in Airflow, they update the Airflow image spec, which triggers an automatic build and pushes the new image to Docker Hub (check out this post for details).

- Once the new image is available, they can use it to keep developing DAGs in their own DAGs repository and create a PR against that repository’s develop branch.

- Before the DAG is deployed, the data scientist updates the Airflow ArgoCD repository to point the Airflow image to the new image version.

- ArgoCD picks up the update automatically and updates the develop environment’s Airflow.

- The data scientist finally merges their code into the develop branch of the DAGs repository.

- The develop environment’s Airflow picks up the DAG change, and we can run tests in the develop environment.

- After all tests pass, we deploy Airflow to production and merge the DAGs repository’s develop branch into master.
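The image update in this walkthrough can be a small change in the develop overlay. Here is a minimal sketch using Kustomize’s `images` transformer. It assumes the chart renders the default `apache/airflow` image name; the Docker Hub repository and tag are placeholders:

```yaml
# overlays/develop/kustomization.yaml (hypothetical path)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

resources:
  - ../../base

# Swap the Airflow image everywhere it appears in the rendered manifests.
images:
  - name: apache/airflow           # image name as rendered by the chart (assumed default)
    newName: example-org/airflow   # placeholder Docker Hub repository
    newTag: "2.3.0-custom-1"       # placeholder tag of the newly built image
```

Promoting to production then means making the same tag change in the production overlay once the tests have passed on develop.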

Making Airflow Work on ArgoCD

- ArgoCD doesn’t work natively with Kustomize + Helm charts (i.e., `kustomize build --enable-helm`), so we need to install a plugin to generate the YAML from the Helm chart. See the README for installation instructions. In the plugin, we basically replaced the YAML spec generation command with `kustomize build --enable-helm`.

- So the approach we took is to use Kustomize as the main configuration management tool, which means we are translating the Helm chart to Kustomize. To do this, we installed a plugin in ArgoCD. If you know of a more elegant approach, please let me know; your suggestions would be much appreciated.

- The `overlays` folder is where we make Airflow’s gitSync pull from different branches depending on the deployment environment. `base/kustomization` is where we translate the Helm chart’s job-hook-related flags into ArgoCD hooks. Since we are deploying with Kustomize rather than Helm, we need these flags so that ArgoCD knows when to start these jobs and how to handle the hook delete policy.

- For detailed steps, please visit the repository’s README file.
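The two pieces described above can be sketched as follows. This is an illustration under assumptions, not a copy of the repository. The plugin name `kustomized-helm`, the chart version, and the job name `airflow-run-airflow-migrations` are placeholders. The first document uses the `argocd-cm` style of plugin registration found in older ArgoCD releases; newer releases register plugins as sidecars instead, so check the README for the method that matches your version.

```yaml
# 1) Plugin registration in the argocd-cm ConfigMap (older ArgoCD releases).
apiVersion: v1
kind: ConfigMap
metadata:
  name: argocd-cm
  namespace: argocd
data:
  configManagementPlugins: |
    - name: kustomized-helm        # hypothetical plugin name
      generate:
        command: ["sh", "-c"]
        args: ["kustomize build --enable-helm"]
---
# 2) base/kustomization.yaml: inflate the Helm chart, then add ArgoCD hooks.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization

helmCharts:
  - name: airflow
    repo: https://airflow.apache.org
    version: 1.7.0                 # example version; pin the one you have tested
    releaseName: airflow
    namespace: airflow
    valuesFile: values.yaml

patches:
  # Tell ArgoCD to run the migration job as a sync hook and to delete
  # the previous run before creating a new one.
  - target:
      kind: Job
      name: airflow-run-airflow-migrations   # assumed name for release "airflow"
    patch: |-
      apiVersion: batch/v1
      kind: Job
      metadata:
        name: airflow-run-airflow-migrations
        annotations:
          argocd.argoproj.io/hook: Sync
          argocd.argoproj.io/hook-delete-policy: BeforeHookCreation
```

An ArgoCD `Application` would then select the plugin by name through its `spec.source.plugin.name` field, so that ArgoCD renders the overlay with the custom command instead of its built-in Kustomize call.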

And with that, you’ve crossed another level to becoming a boss coder. GG! 👏

I hope you found this article instructional and informative. If you have any feedback or queries, please let me know in the comments below.
