What’s the Fuss About MCP AI Agents?

(This is a ChatGPT-translated article from: https://medium.com/@schwanndenkuo/mcp-ai-agent%E5%9C%A8%E7%B4%85%E4%BB%80%E9%BA%BC-e7fd2523c749)

(If you would rather start building your own AI agent, check out: AI Agent 0 到 1 (From 0 to 1): MCP Server with Goose)

Background Story

As large language models (LLMs) like ChatGPT, Claude, and Gemini continue to evolve and become more powerful, the question arises: where is AI headed in the future? Tech industry leaders such as Andrew Ng, Elon Musk, and Jensen Huang have all pointed out that “AI Agents will be the next major milestone.” But what exactly is an AI Agent?

With more and more LLM providers offering advanced models and applications, we naturally wonder: is there an open and universal protocol that allows us to create AI Agents freely without being locked into a specific platform or provider?

Limitations of LLMs

When we use LLMs like ChatGPT or Claude, we can ask questions and have conversations, but they can’t actually “do” anything. For example, you can’t directly ask ChatGPT to book a table at a restaurant, send an email, or fetch a report from your home NAS.

This is where AI Agents come into play. An AI Agent acts like a personal assistant that not only converses with you but also takes real actions based on your needs. For instance, suppose you want to know when a particular colleague last logged into your company’s system. A standard LLM can’t help, because it has no access to your company’s internal data. An AI Agent, however, can call the company’s internal APIs or query its database to retrieve that information instantly.
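The Python sketch below makes “querying the database” concrete. It shows the kind of function an agent tool might wrap. The `logins` table, its columns, the sample rows, and the `last_login` helper are all hypothetical. An in-memory SQLite database stands in for the company’s real system.

```python
import sqlite3
from typing import Optional


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory stand-in for a company's login history (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE logins (username TEXT NOT NULL, logged_in_at TEXT NOT NULL)")
    conn.executemany(
        "INSERT INTO logins VALUES (?, ?)",
        [
            ("alice", "2024-05-02T09:15:00"),
            ("alice", "2024-05-20T17:40:00"),
            ("bob", "2024-05-11T08:05:00"),
        ],
    )
    return conn


def last_login(conn: sqlite3.Connection, username: str) -> Optional[str]:
    """Return the user's most recent login timestamp, or None if there is none.

    ISO-8601 strings sort chronologically, so MAX() picks the latest one.
    The parameterized query (?) keeps user input out of the SQL text.
    """
    row = conn.execute(
        "SELECT MAX(logged_in_at) FROM logins WHERE username = ?", (username,)
    ).fetchone()
    return row[0]


if __name__ == "__main__":
    db = make_demo_db()
    print(last_login(db, "alice"))  # 2024-05-20T17:40:00
```

An agent would expose a function like this as a tool. The model supplies `username`, and the answer always reflects the live table rather than the model’s training data.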

Another limitation of LLMs is that their responses are confined to their pre-trained knowledge. If you ask about a breaking news event or specific business data within your company, an LLM will often be clueless. To address this, we might use Retrieval-Augmented Generation (RAG), which allows an LLM to “look up” additional data before responding. However, RAG works best with relatively static, infrequently changing data. For real-time or dynamic requests, such as checking the current shipping status of a customer’s order, RAG can be slow and costly, because the indexed data would have to be refreshed constantly to stay accurate.

On the other hand, an AI Agent functions like a real-world assistant that can handle tasks dynamically. If you ask, “Where is my order #12345 right now?” the AI Agent can immediately call your company’s logistics system API to provide a real-time, accurate response.

Summary

- General LLMs act like “knowledgeable encyclopedias” that provide answers based on their training data but cannot take action.

- RAG-enhanced LLMs function as “encyclopedias with a database,” useful for retrieving external data but not ideal for real-time scenarios.

- AI Agents work like “assistants that can take action,” using tools and APIs to solve specific, real-time problems.

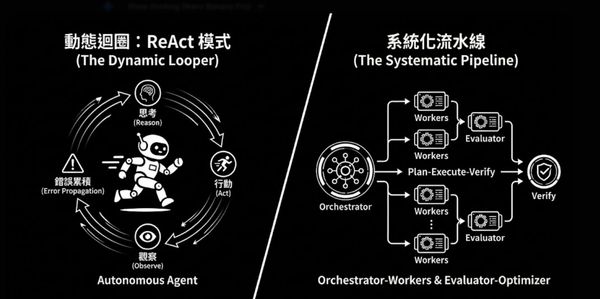

Limitations of AI Agents

- Interoperability Issue:

With the rise of multiple LLM providers such as Anthropic (Claude), Google (Gemini), and OpenAI, AI-powered applications have diversified, from chatbots like ChatGPT to intelligent IDEs like Cursor. However, ensuring that an AI Agent integrates seamlessly across different platforms is challenging. Ideally, we need a standardized protocol that allows an AI Agent to work flexibly across platforms without being tied to a specific model or service provider.

- Limited Adaptability for Automation:

Traditional AI Agents are designed primarily for human users — meaning they operate step by step based on user commands or predefined workflows. This limits their flexibility when used by other machines (such as client applications or automation services). Ideally, applications should be able to dynamically select and orchestrate different AI Agent tools based on real-time needs, rather than following rigid, pre-scripted sequences.

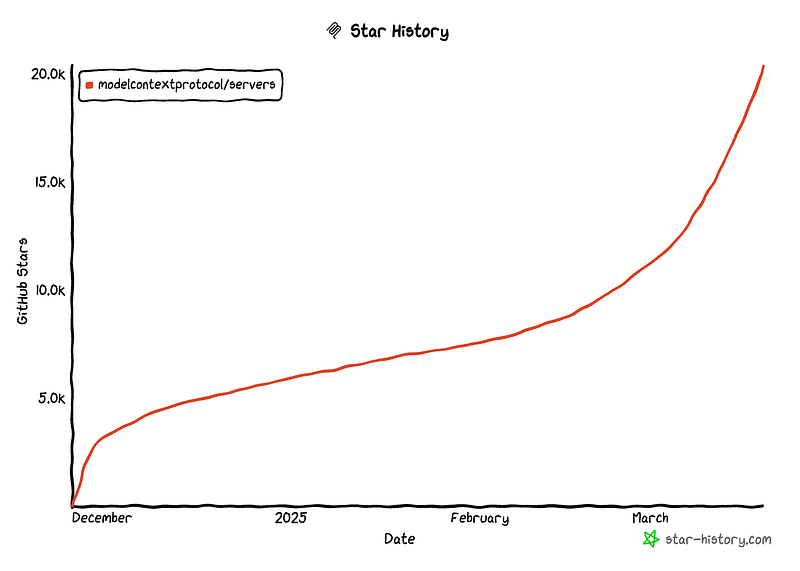

How MCP (Anthropic’s Model Context Protocol) Solves These Issues

MCP introduces a standardized client-server architecture that addresses these challenges. Developers can focus on building independent function modules, known as MCP Servers, each of which handles specific tasks such as sending emails, managing calendars, or performing image recognition. Meanwhile, applications that integrate with LLMs, such as Claude Chatbot or intelligent IDEs like Cursor, act as MCP Clients. These MCP Clients can dynamically discover the capabilities that MCP Servers offer and select the most appropriate tools for complex tasks.

This structured collaboration makes AI Agents more interoperable, easier for machines to understand, and seamlessly automatable, enhancing the flexibility and efficiency of the entire AI ecosystem.
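A minimal, self-contained Python sketch of the server side may help. MCP messages are JSON-RPC 2.0. The spec defines `tools/list` to discover tools and `tools/call` to invoke one. The `ToyMCPServer` class and the `send_email` tool below are hypothetical and only mimic the shape of those two methods. This is not the official MCP SDK: transport, the `initialize` handshake, and most error handling are left out.

```python
import json
from typing import Any, Callable


class ToyMCPServer:
    """Hypothetical toy server mimicking MCP's tools/list and tools/call methods."""

    def __init__(self) -> None:
        self._tools: dict[str, dict[str, Any]] = {}
        self._handlers: dict[str, Callable[..., str]] = {}

    def tool(self, name: str, description: str, input_schema: dict[str, Any]):
        """Decorator that registers a function as a discoverable tool."""
        def register(fn: Callable[..., str]) -> Callable[..., str]:
            self._tools[name] = {"name": name, "description": description,
                                 "inputSchema": input_schema}
            self._handlers[name] = fn
            return fn
        return register

    def handle(self, raw_request: str) -> str:
        """Answer one JSON-RPC 2.0 request string with a response string."""
        request = json.loads(raw_request)
        method, params = request["method"], request.get("params", {})
        if method == "tools/list":
            return self._reply(request, {"tools": list(self._tools.values())})
        if method == "tools/call":
            name = params.get("name")
            if name not in self._handlers:
                # -32602 is JSON-RPC's standard "Invalid params" code.
                return self._error(request, -32602, f"Unknown tool: {name}")
            text = self._handlers[name](**params.get("arguments", {}))
            return self._reply(request, {"content": [{"type": "text", "text": text}],
                                         "isError": False})
        # -32601 is JSON-RPC's standard "Method not found" code.
        return self._error(request, -32601, f"Method not found: {method}")

    @staticmethod
    def _reply(request: dict[str, Any], result: dict[str, Any]) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})

    @staticmethod
    def _error(request: dict[str, Any], code: int, message: str) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"),
                           "error": {"code": code, "message": message}})


server = ToyMCPServer()


@server.tool(
    "send_email",
    "Send a plain-text email.",
    {"type": "object",
     "properties": {"to": {"type": "string"}, "body": {"type": "string"}},
     "required": ["to", "body"]},
)
def send_email(to: str, body: str) -> str:
    # A real server would hand the message to a mail service; this one only pretends.
    return f"queued email to {to}"


listing = server.handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}))
print(json.loads(listing)["result"]["tools"][0]["name"])  # send_email
```

Each tool carries its own description and input schema. Because of that, any client that speaks the protocol can discover and call it without knowing in advance what the server does.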

Real-World Workflow Example

Imagine you’ve built an AI customer service chatbot using MCP that integrates two tools:

- Order Tracking Tool — Connects to your company’s database to check order status based on a provided order number.

- Coupon Issuance Tool — Dynamically decides whether to issue discount coupons if a customer is dissatisfied.
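The Python sketch below shows one way the two tools might be described. The tool names `track_order` and `issue_coupon`, their arguments, and the in-memory `ORDERS` data are hypothetical. The descriptor layout follows the shape MCP servers use in their `tools/list` responses: `name`, `description`, and an `inputSchema` written as JSON Schema.

```python
from typing import Any

# Hypothetical in-memory data standing in for the company's order system.
ORDERS = {
    "12345": "Shipment delayed, not yet dispatched",
    "67890": "Payment not completed",
}

# Tool descriptors: a name, a description the model reads, and a JSON Schema
# for the arguments. Names and fields here are illustrative only.
TOOLS: list[dict[str, Any]] = [
    {
        "name": "track_order",
        "description": "Look up the current status of an order by its order number.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
    {
        "name": "issue_coupon",
        "description": "Issue a discount coupon to compensate a dissatisfied customer.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "order_id": {"type": "string"},
                "percent_off": {"type": "integer", "minimum": 1, "maximum": 50},
            },
            "required": ["order_id", "percent_off"],
        },
    },
]


def track_order(order_id: str) -> str:
    """Return the stored status, or a not-found message."""
    return ORDERS.get(order_id, f"Order {order_id} not found")


def issue_coupon(order_id: str, percent_off: int) -> str:
    """Pretend to create a coupon; a real tool would write to a promotions system."""
    return f"COUPON-{order_id}-{percent_off}"
```

The descriptions matter more than they look. They are what the model reads when deciding whether a tool fits the request.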

Scenario: A Customer Inquiry

A customer asks the AI chatbot:

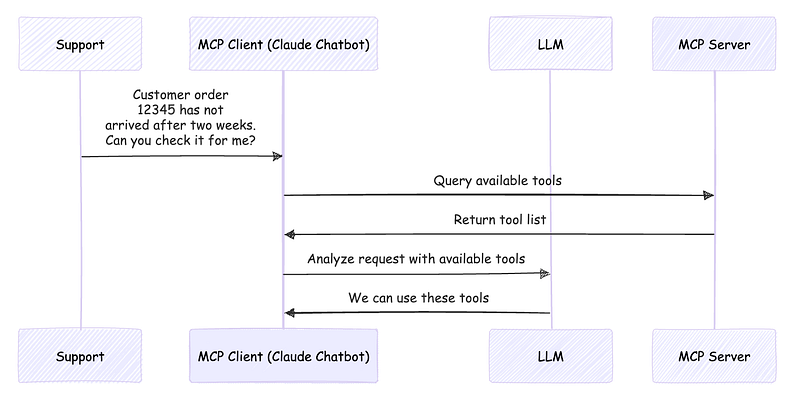

“My order #12345 has been delayed for two weeks. Can you check on it for me?”

- MCP Client (e.g., Claude Chatbot) analyzes the request and determines that it needs external tools to complete the task.

- It dynamically queries the MCP Server to check what tools are available.

- The MCP Server responds with a list of available tools and how to use them.

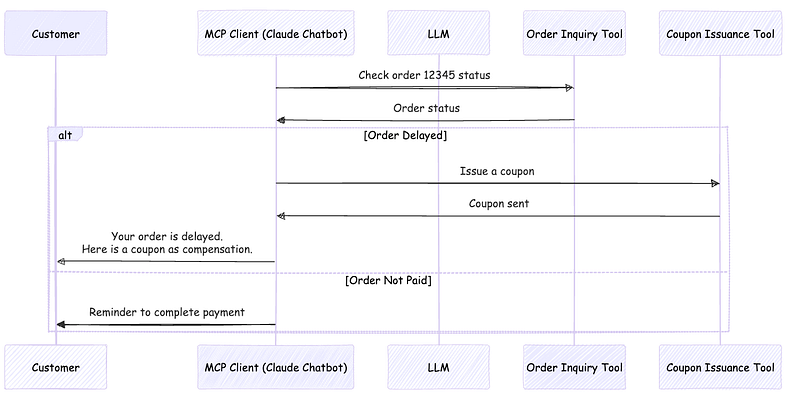
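On the wire, the discovery step is a JSON-RPC 2.0 exchange. The Python sketch below shows a `tools/list` request and an illustrative response; the tool names and descriptions in it are hypothetical. It then extracts what the client needs, namely each tool’s name and required arguments. The `summarize_tools` helper is an assumption for illustration, not part of any SDK.

```python
import json

# The discovery request: JSON-RPC 2.0, method "tools/list", no params needed.
request = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}

# An illustrative reply. The field layout (name, description, inputSchema)
# follows MCP; the tools themselves are hypothetical.
response_text = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "result": {"tools": [
        {"name": "track_order",
         "description": "Look up an order's current status.",
         "inputSchema": {"type": "object",
                         "properties": {"order_id": {"type": "string"}},
                         "required": ["order_id"]}},
        {"name": "issue_coupon",
         "description": "Issue a discount coupon to a dissatisfied customer.",
         "inputSchema": {"type": "object",
                         "properties": {"order_id": {"type": "string"},
                                        "percent_off": {"type": "integer"}},
                         "required": ["order_id", "percent_off"]}},
    ]},
})


def summarize_tools(raw: str, expected_id: int) -> list[str]:
    """Match the reply to our request, then list each tool with its required arguments."""
    msg = json.loads(raw)
    if msg.get("id") != expected_id:
        raise ValueError("reply does not match the request id")
    return [
        f"{tool['name']}({', '.join(tool['inputSchema'].get('required', []))})"
        for tool in msg["result"]["tools"]
    ]


print(json.dumps(request))
for line in summarize_tools(response_text, request["id"]):
    print(line)
```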

- The AI decides to first call the Order Tracking Tool, using order number #12345 to check the status.

- Suppose the response indicates “Shipment delayed, not yet dispatched.”

- The LLM understands that the customer might be dissatisfied, so it calls the Coupon Issuance Tool to offer compensation.

- The AI chatbot responds: “Your order is delayed due to a logistics issue. We sincerely apologize for the inconvenience. As a token of our apology, we’re offering you a discount coupon.”

Now suppose the order status instead showed “Payment not completed”:

- The AI would not issue a coupon since the issue isn’t a shipping delay.

- Instead, it would respond: “Your order is awaiting payment. Please complete the payment, and we will process the shipment immediately. Thank you!”
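The decision flow in both scenarios can be sketched as a small agent loop in Python. Two assumptions apply. First, the tools are toy stand-ins with made-up data. Second, `stand_in_model` is a hard-coded placeholder for the LLM, included only so the sketch runs. In a real MCP setup the model itself reads the tool descriptions and chooses the next call, which is exactly the logic you would not have to write by hand.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

# Toy versions of the scenario's two tools, with made-up order data.
ORDERS = {"12345": "Shipment delayed, not yet dispatched",
          "67890": "Payment not completed"}

TOOLS: dict[str, Callable[..., str]] = {
    "track_order": lambda order_id: ORDERS.get(order_id, "Order not found"),
    "issue_coupon": lambda order_id: f"COUPON-{order_id}",
}


@dataclass
class Decision:
    tool: Optional[str] = None                        # tool to call next, or None to answer
    args: dict[str, Any] = field(default_factory=dict)
    reply: str = ""                                   # final answer when tool is None


def stand_in_model(order_id: str, observations: list[tuple[str, str]]) -> Decision:
    """Placeholder for the LLM. These rules exist only so the sketch runs;
    in a real MCP setup the model makes these choices from the tool descriptions."""
    if not observations:
        return Decision(tool="track_order", args={"order_id": order_id})
    last_tool, last_result = observations[-1]
    if last_tool == "track_order" and "delayed" in last_result.lower():
        return Decision(tool="issue_coupon", args={"order_id": order_id})
    if last_tool == "issue_coupon":
        return Decision(reply="Your order is delayed due to a logistics issue. "
                              "We sincerely apologize for the inconvenience. "
                              f"As a token of our apology, here is a coupon: {last_result}.")
    if "payment not completed" in last_result.lower():
        return Decision(reply="Your order is awaiting payment. Please complete the payment, "
                              "and we will process the shipment immediately. Thank you!")
    return Decision(reply=f"Order {order_id} status: {last_result}")


def run_agent(order_id: str, max_steps: int = 5) -> tuple[str, list[str]]:
    """Loop: ask the model, execute the tool it picks, feed the result back."""
    observations: list[tuple[str, str]] = []
    for _ in range(max_steps):
        decision = stand_in_model(order_id, observations)
        if decision.tool is None:
            return decision.reply, [name for name, _ in observations]
        result = TOOLS[decision.tool](**decision.args)
        observations.append((decision.tool, result))
    raise RuntimeError("agent did not finish within max_steps")


for oid in ("12345", "67890"):
    print(run_agent(oid))
```

The `max_steps` cap is a common safeguard in agent loops, so a model that keeps requesting tools cannot run forever.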

In this scenario, we didn’t need to manually code logic for every possible situation. By simply providing MCP Server tools, the LLM itself can dynamically decide which tools to use to resolve issues intelligently.

Frequently Asked Questions (FAQ)

Q: Who developed MCP?

A: MCP was introduced by Anthropic as a protocol that enables AI models to interact more flexibly with external tools. However, MCP is not exclusive to Anthropic’s AI models (e.g., Claude) but is designed as an open, vendor-agnostic standard that any AI model can use.

Q: How does MCP work?

A: The workflow follows these steps:

- The MCP Client (e.g., Claude Desktop or Goose) sends a request to the MCP Server.

- The MCP Server responds with available tools, resources, and prompts.

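- The MCP Client passes these capabilities to the AI model, which decides which tools to call and with what arguments. The client then executes those calls on the MCP Server and returns the results to the model to fulfill the user request.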

This allows AI to dynamically select and combine different tools to complete tasks more efficiently.
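The Python sketch below lists, in order, the messages a client sends over one session. The method names come from the MCP specification: `initialize`, `notifications/initialized`, `tools/list`, `resources/list`, `prompts/list`, and `tools/call`. Several details are assumptions for illustration: the `client_session` helper, the client name, and the pinned protocol version `2024-11-05`. That version is one published revision; real clients negotiate the version with the server. Transport and the server’s replies are omitted.

```python
import json
from itertools import count
from typing import Any, Optional


def client_session(tool_name: str, arguments: dict[str, Any]) -> list[dict[str, Any]]:
    """Return, in order, the JSON-RPC messages a client would send in one session."""
    ids = count(1)

    def rpc(method: str, params: Optional[dict[str, Any]] = None,
            notify: bool = False) -> dict[str, Any]:
        # Requests carry an id and expect a reply; notifications carry neither.
        msg: dict[str, Any] = {"jsonrpc": "2.0", "method": method}
        if not notify:
            msg["id"] = next(ids)
        if params is not None:
            msg["params"] = params
        return msg

    return [
        # 1. Handshake: agree on a protocol revision and exchange capabilities.
        rpc("initialize", {
            "protocolVersion": "2024-11-05",
            "capabilities": {},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},
        }),
        rpc("notifications/initialized", notify=True),
        # 2. Discovery. A real client sends these only for capabilities the
        #    server advertised in its initialize reply.
        rpc("tools/list"),
        rpc("resources/list"),
        rpc("prompts/list"),
        # 3. Invocation of the tool the model chose.
        rpc("tools/call", {"name": tool_name, "arguments": arguments}),
    ]


for message in client_session("track_order", {"order_id": "12345"}):
    print(json.dumps(message))
```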

Q: Is MCP limited to Claude?

A: No. While Claude Desktop is an MCP Client developed by Anthropic, MCP itself is not tied to any single AI vendor. For example, Goose is a rapidly growing MCP Client that supports integration with various LLM APIs and features an MCP Server marketplace offering a variety of tools.

Q: Are there security risks with MCP? How can we ensure its security?

A: MCP does not require sending sensitive data to AI models by default. Security depends on how MCP Servers manage data transmission. Best practices include:

- Access control: Restrict API and tool access to prevent data leaks.

- API key management: Securely store and manage API keys to prevent unauthorized access.

- Monitoring and auditing: Track API calls and data access to detect and mitigate security risks.

Following these practices can significantly reduce the security risks of an MCP deployment while maintaining data integrity.
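The three practices can be combined in a thin wrapper around tool calls. This Python sketch is hypothetical throughout: the role names, the `ORDER_API_KEY` environment variable, and the `guarded_call` helper are illustrative, not part of MCP. The wrapper does four things:

- reads the secret from the environment;
- compares keys in constant time;
- enforces a per-role tool allowlist;
- writes an audit log entry for every accepted or rejected call.

```python
import hmac
import logging
import os
from typing import Any, Callable

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")
audit_log = logging.getLogger("mcp.audit")

# Access control: which tools each (hypothetical) caller role may use.
ALLOWED_TOOLS: dict[str, set[str]] = {
    "support_bot": {"track_order", "issue_coupon"},
    "read_only": {"track_order"},
}


def load_api_key(var: str = "ORDER_API_KEY") -> str:
    """API key management: read the secret from the environment, never from source code."""
    key = os.environ.get(var)
    if not key:
        raise RuntimeError(f"{var} is not set")
    return key


def guarded_call(role: str, presented_key: str, expected_key: str,
                 tool: str, handler: Callable[..., str], **args: Any) -> str:
    """Check the key and the allowlist, audit the attempt, then run the tool."""
    # compare_digest avoids leaking key contents through timing differences.
    # (With str arguments it accepts ASCII only; use bytes for arbitrary keys.)
    if not hmac.compare_digest(presented_key, expected_key):
        audit_log.warning("rejected %s for role=%s: bad API key", tool, role)
        raise PermissionError("invalid API key")
    if tool not in ALLOWED_TOOLS.get(role, set()):
        audit_log.warning("rejected %s for role=%s: not allowed", tool, role)
        raise PermissionError(f"role {role!r} may not call {tool!r}")
    # Monitoring and auditing: log who called what, with argument names only.
    audit_log.info("role=%s called %s with args %s", role, tool, sorted(args))
    return handler(**args)


if __name__ == "__main__":
    os.environ.setdefault("ORDER_API_KEY", "demo-secret")  # demo only
    key = load_api_key()
    print(guarded_call("read_only", key, key, "track_order",
                       lambda order_id: f"status of {order_id}", order_id="12345"))
```

The audit log records argument names but not their values. This keeps the log itself from becoming a store of sensitive customer data.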